Apache Camel has so many ways of making your life easier; here’s one.

I needed to import a fixed-format file, the kind of thing that reminds you to hug XML and even give JSON a break every so often. In this case, I was importing the Yale “Bright Star Catalogue”, which holds a load of numbers about the visible stars, around 9000 of them all told. It isn’t a huge database, but like all fixed-format data it’s a pain to parse, and I needed the output in XML.

I looked at what Camel had to offer and came across the BeanIO component, which handles CSV, fixed-length and XML formats. This made life easier straight away. For a start, there’s an external XML mapping file that tells the parser what fields to expect and what to do with them (the BeanIO documentation covers all the options). Here are the first few fields in my star data:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<beanio xmlns="http://www.beanio.org/2012/03"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://www.beanio.org/2012/03 http://www.beanio.org/2012/03/mapping.xsd">

  <stream name="stars" format="fixedlength">
    <record name="star" class="java.util.HashMap">
      <field name="HR" length="4" trim="true" />
      <field name="NAME" length="10" trim="true" />
      <field name="DM" length="11" trim="true" />
      <field name="HD" length="6" trim="true" />
    </record>
  </stream>

</beanio>
```

I’m using plain Spring XML for my Camel routes, so I have no class to map my data to, hence the HashMap. If you do have one, your class goes in the record’s class attribute. Also, as I’m after XML output, I’m trimming each field so I don’t end up with a file full of spaces.
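To make the fixed-length idea concrete, here’s a rough Python sketch of what the “stars” stream does to each line: slice it at the widths from the mapping file and trim each piece. It’s only an illustration, not how BeanIO works internally. `STAR_FIELDS` and `parse_fixed` are hypothetical names, and the sample line is hand-built by padding the values from the output shown further down, not copied from the catalogue.

```python
# Hypothetical layout mirroring the "star" record in mapping.xml:
# (field name, width in characters), in column order.
STAR_FIELDS = [("HR", 4), ("NAME", 10), ("DM", 11), ("HD", 6)]


def parse_fixed(line, fields=STAR_FIELDS):
    """Slice one fixed-length line into a dict, trimming each value
    (the equivalent of trim="true" on every <field>)."""
    record = {}
    pos = 0
    for name, width in fields:
        # In Python, slicing past the end of a short line yields "" rather than failing.
        record[name] = line[pos:pos + width].strip()
        pos += width
    return record


if __name__ == "__main__":
    # Hand-built sample: HR right-aligned in 4, NAME padded to 10, DM to 11, HD right-aligned in 6.
    line = "   3" + "33 Psc    " + "BD-06 6357 " + "    28"
    print(parse_fixed(line))
    # {'HR': '3', 'NAME': '33 Psc', 'DM': 'BD-06 6357', 'HD': '28'}
```

Because the layout is just data, a different catalogue only needs a different field list. The mapping file gives you the same property.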

BeanIO can run either as a DataFormat or as a Component; I’m using the former. Now all I needed was a folder to put the file in and a bit of Camel:

```xml
<dataFormats>
  <beanio id="stars" mapping="mapping.xml" streamName="stars" ignoreUnexpectedRecords="true"/>
</dataFormats>

<route>
  <from uri="file:inbox?noop=true"/>
  <split streaming="true" parallelProcessing="true">
    <tokenize token="\r\n|\n" xml="false" trim="true" />
    <to uri="direct:stars"/>
  </split>
</route>

<route>
  <from uri="direct:stars"/>
  <unmarshal ref="stars"/>
</route>
```

This is standard stuff. The dataFormats section points to the mapping file and says which stream definition to use from it. The first route then splits the file and sends each line on, and the second “unmarshals” each line into a HashMap using that definition.
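The first route’s job is to read the file line by line and hand each line to `direct:stars`. In plain Python that looks roughly like this. It’s an analogue under stated assumptions rather than Camel code. `split_lines`, `route` and the `direct_stars` callback are hypothetical names, and lines are handled one after another (there’s no equivalent of `parallelProcessing` here). Only line endings are stripped, because leading spaces are significant in fixed-length data.

```python
import io
from typing import Callable, Iterable, Iterator


def split_lines(source: Iterable[str]) -> Iterator[str]:
    """Yield lines one at a time (in the spirit of streaming="true", the whole
    file is never held in memory), removing CRLF/LF endings and skipping blanks."""
    for raw in source:
        # Strip only line-ending characters: in fixed-length data the
        # leading spaces belong to the first field's columns.
        line = raw.rstrip("\r\n")
        if line:
            yield line


def route(source: Iterable[str], direct_stars: Callable[[str], None]) -> None:
    """Rough analogue of the first route: split the input, send each line on."""
    for line in split_lines(source):
        direct_stars(line)


if __name__ == "__main__":
    # io.StringIO stands in for the file in inbox; it leaves "\r\n" untranslated,
    # so both line-ending styles are exercised.
    sample = io.StringIO("   1first\r\n   2second\n\n")
    route(sample, print)
```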

At this point I was fairly happy, as the split was simple, but I still faced writing some sort of Groovy bean to assemble the HashMap into the XML I wanted. I actually started down that road, then came across this in the docs:

> Our original mapping file from Section 2.1 can now be updated to parse XML instead of CSV with only two minor changes. First, the stream format is changed to xml. And second, the hire date field format is removed…

Lightbulb moment. All I needed was to add a second stream to the mapping file, using the fields I wanted in my XML, and BeanIO would “marshal” it for me. No bean, no mess, no fuss. Again, there are plenty of options: you can rename elements, turn some fields into attributes, change formats. I just needed the plain version, with a couple of tweaks to suppress the `<?xml …?>` header and to indent the output for readability’s sake:

```xml
<stream name="stars2" format="xml" xmlType="none">
  <parser>
    <property name="suppressHeader" value="true" />
    <property name="indentation" value="2" />
  </parser>
  <record name="star" class="java.util.HashMap">
    <field name="HR" />
    <field name="NAME" />
    <field name="DM" />
    <field name="HD" />
  </record>
</stream>
```
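For comparison, here’s roughly what the “stars2” stream saves you from writing by hand. This small Python sketch uses the standard library’s `xml.etree.ElementTree` to turn one record into a `<star>` element with no XML declaration and two-space indentation. The function name and field list are hypothetical, and `ET.indent` needs Python 3.9 or later.

```python
import xml.etree.ElementTree as ET

# Hypothetical field order, matching the <field> elements in "stars2".
STAR_XML_FIELDS = ["HR", "NAME", "DM", "HD"]


def marshal_star(record, fields=STAR_XML_FIELDS):
    """Render one record as a <star> element: no <?xml ...?> header
    (cf. suppressHeader) and two-space indentation (cf. indentation="2")."""
    star = ET.Element("star")
    for name in fields:
        # One child element per field; ElementTree escapes &, < and > for us.
        ET.SubElement(star, name).text = record.get(name, "")
    ET.indent(star, space="  ")
    # encoding="unicode" returns a str and writes no XML declaration.
    return ET.tostring(star, encoding="unicode")


if __name__ == "__main__":
    print(marshal_star({"HR": "3", "NAME": "33 Psc", "DM": "BD-06 6357", "HD": "28"}))
```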

Now I just need to add the second DataFormat and modify my route a little so that the filename comes from the data:

```xml
<dataFormats>
  <beanio id="stars" mapping="mapping.xml" streamName="stars"
          ignoreUnexpectedRecords="true"/>
  <beanio id="stars2" mapping="mapping.xml" streamName="stars2"/>
</dataFormats>

<route>
  <from uri="file:inbox?noop=true"/>
  <split streaming="true" parallelProcessing="true">
    <tokenize token="\r\n|\n" xml="false" trim="true" />
    <to uri="direct:stars"/>
  </split>
</route>

<route>
  <from uri="direct:stars"/>
  <unmarshal ref="stars"/>
  <setHeader headerName="CamelFileName">
    <simple>${body[0].get('HD')}.xml</simple>
  </setHeader>
  <marshal ref="stars2"/>
  <to uri="file:filebox"/>
</route>
```
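The `${body[0].get('HD')}` expression works because BeanIO’s unmarshal hands back a list of records. So `body[0]` is the HashMap for the current line, and `get('HD')` pulls out its HD number. Below is a sketch of the second route’s remaining steps in Python, with hypothetical names throughout. `marshal` is any function that renders a record as XML, and `outbox` stands in for `file:filebox`.

```python
from pathlib import Path
from typing import Callable, Dict, List

Record = Dict[str, str]


def file_name_for(body: List[Record]) -> str:
    """Mirror ${body[0].get('HD')}.xml: take the first record in the list, use its HD."""
    return f"{body[0].get('HD')}.xml"


def write_star(body: List[Record], marshal: Callable[[Record], str], outbox: Path) -> Path:
    """Name the file from the data, marshal the record and write it to outbox."""
    outbox.mkdir(parents=True, exist_ok=True)
    target = outbox / file_name_for(body)
    # One record per line, so marshal body[0]; write_text overwrites any existing file.
    target.write_text(marshal(body[0]), encoding="utf-8")
    return target
```

Since the filename comes straight from the data, it’s worth checking that the HD column is filled in and unique. In this sketch, a repeated value simply overwrites the earlier file.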

That’s it: 9000 XML files from a few lines of configuration. Here’s one of them:

```xml
<star>
  <HR>3</HR>
  <NAME>33 Psc</NAME>
  <DM>BD-06 6357</DM>
  <HD>28</HD>
</star>
```

The neat thing about this is that this file is one of dozens of astronomy data files, many of them in fixed format. So with the same code, you just add a new stream to the mapping file and you’re parsing the “ACT Reference Catalog” of 100,000 stars.

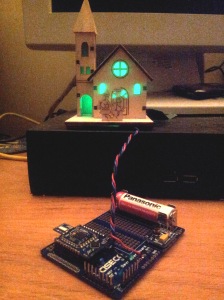

I’ve left the Arduino/RFµ328 side out of this post; it’s easy enough to get something like this with a few lines of code and a bit of soldering.
